Authored by Tony Feng

Created on Jan 27th, 2022

Last Modified on Jan 27th, 2022

Intro

This series of posts contains a summary of materials and readings from the course CSCI 1430 Computer Vision, which I took at Brown University. The course covers the fundamentals of image formation, camera imaging geometry, feature detection and matching, stereo, motion estimation and tracking, image classification, scene understanding, and deep learning with neural networks. I posted these “Notes” (what I’ve learnt) for study and review only.

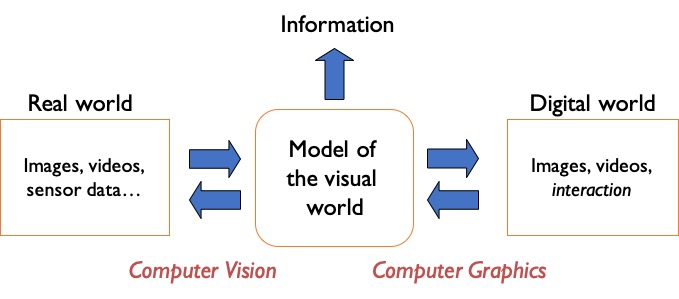

What is Computer Vision

3R Concept

Jitendra Malik at UC Berkeley has stated that the classic problems of computational vision are:

- Reconstruction

- Recognition

- Re-organization

CV & Nearby Fields

What is an Image

Signal

A signal is a (possibly multi-dimensional) function that conveys information about a phenomenon, such as light, heat, gravity, or population distribution.

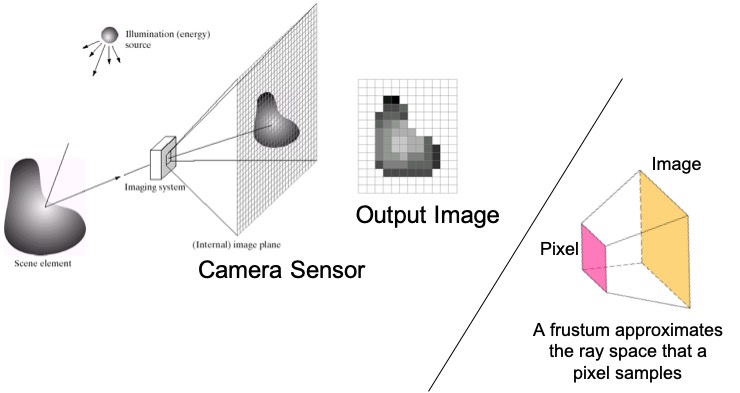

Light reflected from an object creates a continuous signal that is measured by cameras. Natural signals are continuous, but our measurements of them are discrete.

Sampling

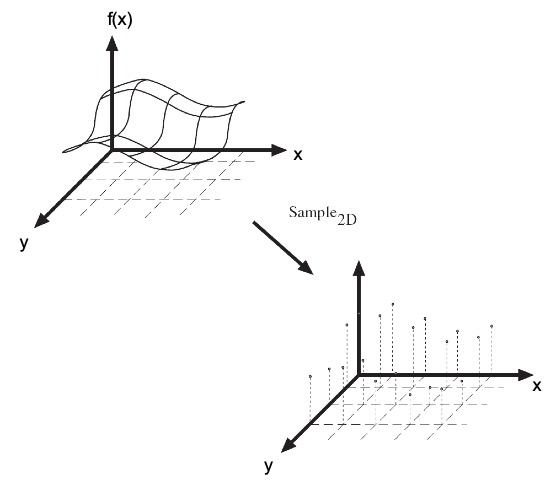

Sampling is the process of reducing a continuous signal to a discrete signal.

Sampling in 1D takes a function and returns a vector whose elements are the values of that function at the sample points; sampling in 2D takes a function and returns a matrix whose entries are the function's values on a 2D grid of sample points.
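A minimal sketch of the idea in plain Python, with no imaging library assumed. The helper names `sample_1d` and `sample_2d` are hypothetical, and the example signals are made up for illustration: sampling a 1D function yields a list of values, and sampling a 2D function on a grid yields a matrix stored as a list of rows.

```python
import math

def sample_1d(f, start, step, n):
    """Return [f(start), f(start + step), ..., f(start + (n - 1) * step)]."""
    return [f(start + i * step) for i in range(n)]

def sample_2d(f, width, height, step=1.0):
    """Return a height x width matrix (list of rows) with entry [r][c] = f(c * step, r * step)."""
    return [[f(c * step, r * step) for c in range(width)] for r in range(height)]

def signal_1d(x):
    # A continuous 1D signal: one period of a sine wave per unit of x.
    return math.sin(2 * math.pi * x)

def signal_2d(x, y):
    # A continuous 2D "brightness" signal (hypothetical, chosen so values are easy to read).
    return x + 10 * y

vec = sample_1d(signal_1d, 0.0, 0.125, 8)         # 8 samples per period -> a vector
mat = sample_2d(signal_2d, width=4, height=3)     # a 3 x 4 grid of samples -> a matrix
print(mat)  # [[0.0, 1.0, 2.0, 3.0], [10.0, 11.0, 12.0, 13.0], [20.0, 21.0, 22.0, 23.0]]
```

Choosing a smaller `step` samples the same continuous signal more densely, giving a longer vector or a larger matrix.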

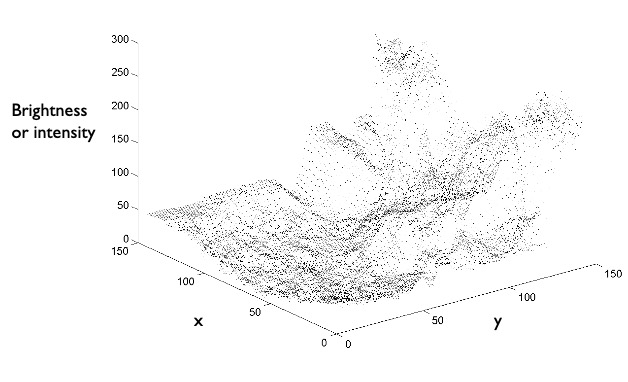

A 2D image is a sampling of a 2D signal. Note that the 2D signal can itself be a projection (or slice) of a higher-dimensional signal, as in MRI or CT scans. An image stores brightness/intensity along the $x$ and $y$ dimensions, while a video is a 2D signal that varies over time.

Pixel

Pixel is short for “picture element”, and each pixel is associated with a value. We can approximate a pixel as a square frustum.

The diagram above arguably contains an error (made for convenience): the scene element should be flipped, because projection onto the camera plane typically turns the image upside down.
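To see where the flip comes from, here is a sketch assuming an ideal pinhole camera at the origin with the image plane a distance $f$ behind it. By similar triangles, a scene point $(X, Y, Z)$ lands at $x = -fX/Z$, $y = -fY/Z$; the minus sign is the flip. The function name `pinhole_project` and the numbers used are illustrative only.

```python
def pinhole_project(X, Y, Z, f):
    """Project a 3D point (X, Y, Z) with Z > 0 through an ideal pinhole at the origin
    onto an image plane a distance f behind the pinhole. Returns image coords (x, y)."""
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    # Similar triangles: the ray passes through the pinhole, so the signs of X and Y flip.
    return (-f * X / Z, -f * Y / Z)

# Top and bottom of a 2 m tall object standing on the optical axis, 10 m away.
top = pinhole_project(0.0, 2.0, 10.0, f=0.05)
bottom = pinhole_project(0.0, 0.0, 10.0, f=0.05)
print(top, bottom)
```

Since `top` has a negative $y$ coordinate, a point above the optical axis lands below it on the image plane, so the image is upside down. Diagrams often avoid the flip by drawing a virtual image plane in front of the pinhole instead.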

Quantization

The signal is also continuous in value: the amount of light can vary continuously. When we convert it into a digital image, we must turn this continuous range into a discrete set of intensity values to store in the image.

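After quantization, the original signal can no longer be reconstructed exactly, because many different input values are mapped to the same level. This is in contrast to sampling: a signal that is sampled but not quantized can, in principle, be reconstructed (provided it is band-limited and sampled above the Nyquist rate).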
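A minimal sketch of uniform quantization, assuming intensities normalized to $[0, 1]$ and equally spaced levels; the helpers `quantize` and `dequantize` are hypothetical names. It illustrates why quantization is irreversible: two different inputs fall into the same level, and afterwards the best we can do is guess the center of that level's bin.

```python
def quantize(value, levels, lo=0.0, hi=1.0):
    """Map a continuous value in [lo, hi] to an integer level in 0..levels-1."""
    t = (min(max(value, lo), hi) - lo) / (hi - lo)  # clamp, then normalize to [0, 1]
    return min(int(t * levels), levels - 1)         # equal-width bins; hi maps to the top level

def dequantize(level, levels, lo=0.0, hi=1.0):
    """Return the center of the bin for a level: a guess, not the original value."""
    return lo + (level + 0.5) * (hi - lo) / levels

levels = 4  # e.g. a 2-bit image
a, b = 0.30, 0.45
qa, qb = quantize(a, levels), quantize(b, levels)
print(qa, qb)                  # 1 1  (two different intensities share one level)
print(dequantize(qa, levels))  # 0.375 (neither 0.30 nor 0.45 can be recovered)
```

More levels shrink the bins and therefore the worst-case error (half a bin width), but the mapping stays many-to-one.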

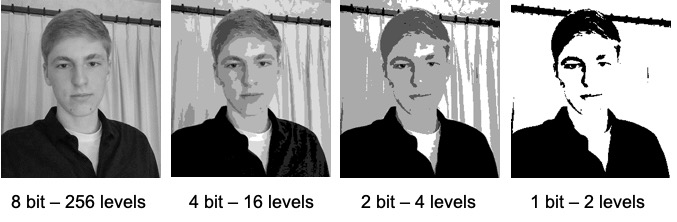

Quantization Effects – Radiometric Resolution

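Radiometric resolution is often called bit depth: the number of bits $b$ used to store each intensity value, which allows $2^b$ distinct levels. In photography, this is also related to dynamic range, the range between the minimum and maximum light intensity that can be measured, perceived, represented, or displayed.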
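The relation between bit depth and the number of levels, plus a sketch of reducing an 8-bit value to a lower bit depth by discarding its low-order bits. Dropping bits is just one simple way to requantize, assumed here for illustration, and the function names are hypothetical.

```python
def num_levels(bits):
    """Number of distinct intensity values representable with the given bit depth."""
    return 2 ** bits

def reduce_bit_depth(value, from_bits=8, to_bits=2):
    """Requantize an integer pixel value to a lower bit depth by dropping low-order bits,
    then shift back so the result stays on the original scale (bottom of each bin)."""
    shift = from_bits - to_bits
    return (value >> shift) << shift

for b in (1, 2, 4, 8, 16):
    print(f"{b:2d}-bit: {num_levels(b)} levels")

print([reduce_bit_depth(v) for v in (0, 63, 64, 200, 255)])  # [0, 0, 64, 192, 192]
```

At 2 bits, all 256 possible 8-bit values collapse onto just four levels (0, 64, 128, 192), which is the banding seen in low-bit-depth images.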

Color

We handle color by having three arrays, one for each of the red, green, and blue color channels; combining the channels gives a color image. In practice, when dealing with images in Python, we treat color as an additional dimension in our arrays, so a color image of height $H$ and width $W$ is typically stored with shape $(H, W, 3)$.
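A dependency-free sketch of this channel layout, using nested lists indexed as `image[y][x][c]`. With NumPy the same layout is usually an array of shape (H, W, 3), which can be built from three H x W arrays with `np.stack([r, g, b], axis=-1)` or `np.dstack((r, g, b))`. The helpers `stack_channels` and `channel` are hypothetical names.

```python
def stack_channels(red, green, blue):
    """Combine three H x W channel arrays into one H x W x 3 image: image[y][x] = [r, g, b]."""
    h, w = len(red), len(red[0])
    for ch in (red, green, blue):
        if len(ch) != h or any(len(row) != w for row in ch):
            raise ValueError("all channels must have the same H x W shape")
    return [[[red[y][x], green[y][x], blue[y][x]] for x in range(w)] for y in range(h)]

def channel(image, c):
    """Extract channel c (0 = R, 1 = G, 2 = B) back out as an H x W array."""
    return [[px[c] for px in row] for row in image]

# A 2 x 2 image with 8-bit values: red, green (top row) / blue, white (bottom row).
R = [[255, 0], [0, 255]]
G = [[0, 255], [0, 255]]
B = [[0, 0], [255, 255]]
img = stack_channels(R, G, B)
print(img[1][1])        # [255, 255, 255] (the white pixel)
print(channel(img, 2))  # [[0, 0], [255, 255]]
```

Keeping the channel as the last index means each pixel's color is one small vector, which matches the (H, W, 3) convention described above.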