Authored by Tony Feng

Created on June 23rd, 2023

Last Modified on July 2nd, 2023

Summary

Title: Adversarial Examples that Fool both Computer Vision and Time-Limited Humans

Source: NeurIPS 2018

Research Questions

- The paper asks whether the human visual system is influenced by adversarial examples that fool computer vision models.

How

- Constructing targeted adversarial examples without access to the target model’s architecture or parameters.

- Adapting machine learning models to mimic the initial visual processing of humans.

- Evaluating classification decisions of human observers in a time-limited setting.

Findings

- We find that adversarial examples that strongly transfer across computer vision models influence the classifications made by time-limited human observers.

Background

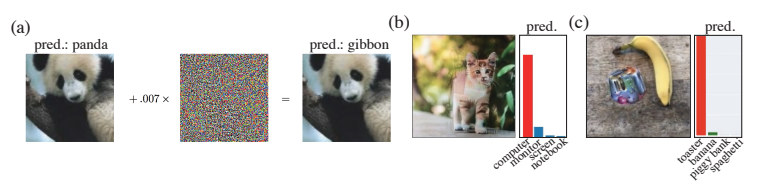

- Adversarial examples can fool computer vision models through subtle image modifications, even transferring across different models to attack inaccessible targets.

- While humans also make errors on certain inputs (e.g. optical illusions, cognitive biases), these generally do not resemble adversarial examples. No thorough empirical investigation of this question had been performed.

- Recent research shows that CNNs and the primate visual system exhibit similar representation and behavior. This suggests the potential transfer of adversarial examples from computer vision models to humans.

- Studying the above question benefits both machine learning and neuroscience, facilitating knowledge exchange between the two fields.

- If the human brain can resist certain adversarial examples, it would show that a similar defense mechanism is possible in machine learning.

- If the brain can be fooled by adversarial examples, machine learning security research should focus on designing secure systems despite non-robust components.

- Studying how adversarial examples affect the brain can enhance our understanding of brain function.

Methods

ML Vision Pipeline

- Combining ImageNet images into six coarse categories in three groups: Pets (dog, cat), Vegetables (broccoli, cabbage), Hazard (spider, snake)

- Training an ensemble of 10 CNN models, each with an additional retinal layer to better match early human visual processing (e.g. spatial blurring)

- Generating targeted adversarial examples that strongly transfer across models
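The last step can be illustrated with a minimal sketch. It is not the paper's implementation: the 10 CNNs are replaced by hypothetical linear softmax classifiers that share a common component, the retinal layer is omitted, and `eps`, `step`, and `n_steps` are assumed values. It shows only the general idea of an iterative targeted sign-gradient attack that averages the loss over an ensemble and keeps the perturbation inside a max-norm ball.

```python
import numpy as np

def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def mean_probs(x, weights):
    """Average class probabilities of the ensemble on a flat image x in [0, 255]."""
    return np.mean([softmax(W @ (x / 255.0)) for W in weights], axis=0)

def targeted_ensemble_attack(x, target, weights, eps=32.0, step=2.0, n_steps=40):
    """Iterative targeted sign-gradient attack on an ensemble of linear softmax models.

    weights: list of (n_classes, n_pixels) arrays, hypothetical stand-ins for CNNs.
    eps, step, n_steps: assumed values, in pixel units where applicable.
    """
    x_adv = x.copy()
    for _ in range(n_steps):
        grad = np.zeros_like(x)
        for W in weights:
            p = softmax(W @ (x_adv / 255.0))
            p[target] -= 1.0           # d(cross-entropy toward target) / d(logits)
            grad += W.T @ p            # chain rule back to the input (up to a positive scale)
        # Step so that the summed target-class loss decreases
        x_adv = x_adv - step * np.sign(grad)
        # Project back into the max-norm ball around x and the valid pixel range
        x_adv = np.clip(x_adv, x - eps, x + eps)
        x_adv = np.clip(x_adv, 0.0, 255.0)
    return x_adv

# Toy usage: 10 correlated linear "models" over a 64-pixel image and 2 classes.
rng = np.random.default_rng(0)
base = rng.normal(size=(2, 64))
weights = [base + 0.3 * rng.normal(size=(2, 64)) for _ in range(10)]
x = rng.uniform(40.0, 215.0, size=64)
target = int(np.argmin(mean_probs(x, weights)))   # attack toward the disfavored class
x_adv = targeted_ensemble_attack(x, target, weights)
```

Averaging the gradient over several models is what encourages the perturbation to rely on features shared across the ensemble, which is the property that makes transfer to an unseen model (or observer) plausible.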

Human Psychophysics Experiment

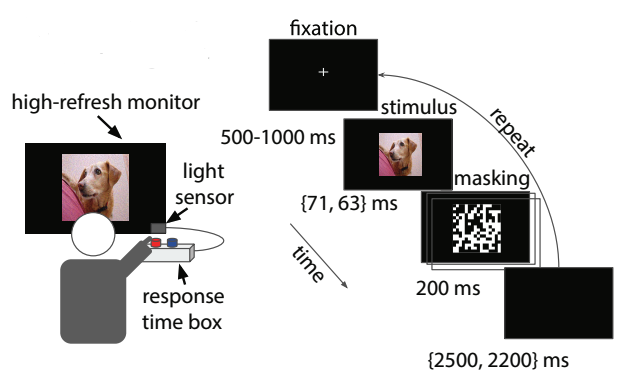

- 38 subjects, seated in a fixed chair, classified images into one of two classes (two-alternative forced choice).

- Images were displayed for 63 ms after a brief fixation period, followed by ten high-contrast binary random masks (each lasting 20 ms).

- Participants had a maximum of 2200 ms after the mask disappeared to respond, facilitating the detection of even subtle effects on perception.

- Each session focused on one of the image groups (Pets, Vegetables, Hazard), with images falling into one of four conditions.

- `image`: images from ImageNet rescaled to [40, 215] to prevent clipping when perturbations are added.

- `adv`: images with an adversarial perturbation $\delta_{adv}$ added that made models output the opposite class in the group.

- `flip`: images with $\delta_{adv}$ flipped vertically before being added. This is a control condition.

- `false`: images from ImageNet outside of the two classes in the group, but perturbed towards one of the classes in the group.
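The four conditions can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code: the helper names are hypothetical, the perturbations are random placeholders rather than real adversarial perturbations, and the bound `eps = 32` is an assumed value chosen so that the rescaled range [40, 215] never leaves [0, 255].

```python
import numpy as np

def rescale(img, lo=40.0, hi=215.0):
    """Linearly map pixel values from [0, 255] to [lo, hi]."""
    return lo + img.astype(np.float64) * (hi - lo) / 255.0

def make_conditions(img, delta_adv, other_img, delta_false):
    """Build the four stimulus conditions (hypothetical helper).

    img:         in-group image, values in [0, 255]
    delta_adv:   perturbation toward the opposite class in the group
    other_img:   image from outside the two classes in the group
    delta_false: perturbation pushing other_img toward an in-group class
    """
    base = rescale(img)
    return {
        "image": base,
        "adv": base + delta_adv,
        "flip": base + np.flipud(delta_adv),   # same perturbation, flipped vertically
        "false": rescale(other_img) + delta_false,
    }

# Toy usage with placeholder perturbations bounded by an assumed eps.
rng = np.random.default_rng(0)
eps = 32.0
img = rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8)
other_img = rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8)
delta_adv = rng.uniform(-eps, eps, size=img.shape)
delta_false = rng.uniform(-eps, eps, size=img.shape)
conditions = make_conditions(img, delta_adv, other_img, delta_false)
```

The `flip` condition keeps the perturbation's pixel statistics identical to `adv` while breaking its spatial alignment with the image, which is why it works as a control.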

Results

Adversarial Examples Transfer to Computer Vision Model

- Adversarial examples tested on two new models were successful for the `adv` and `false` conditions, while `flip` images had little effect (validating their use as a control).

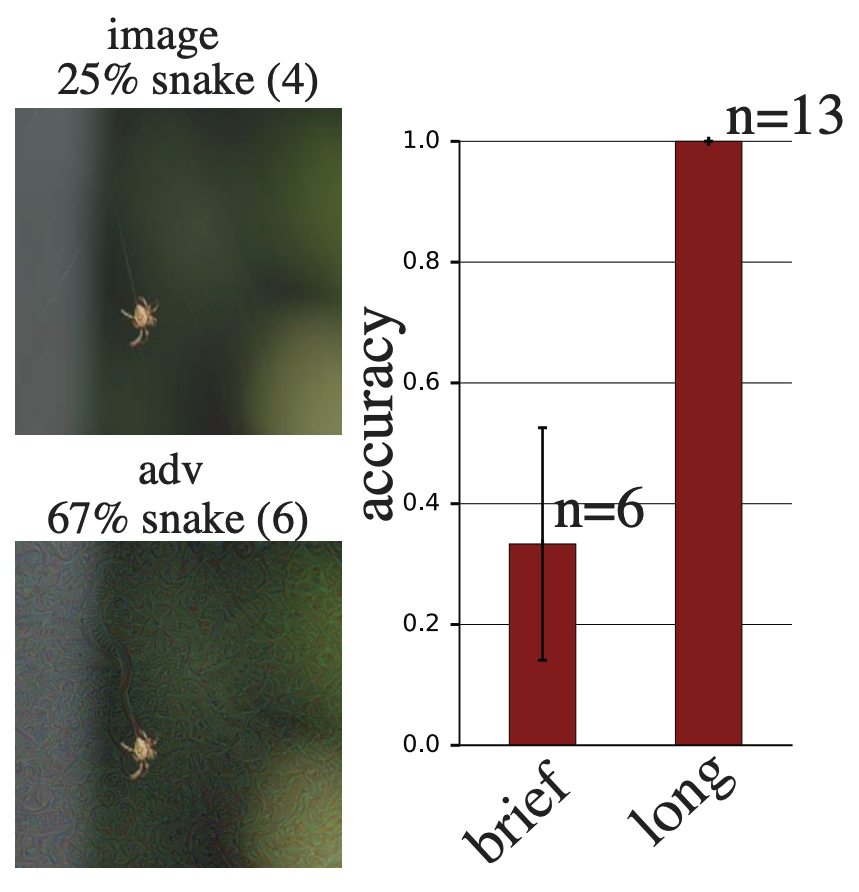

Adversarial Examples Transfer to Humans

- In the `false` condition, adversarial perturbations successfully biased human decisions in all three groups by 1% to 5%. Response time was inversely correlated with the perceptual bias pattern.

- In the other setting, humans had similar accuracies on the `image` and `flip` conditions, while being 7% less accurate on `adv` images.

Discussion

- Without a time limit, humans still get the correct class, suggesting that:

- The adversarial perturbations do not change the “true class”.

- Recurrent connections could add robustness to adversarial examples.

- Adversarial examples do not transfer well from feed-forward to recurrent networks.

- Qualitatively, perturbations seem to work by modifying object edges, modifying textures, and taking advantage of dark regions (larger perceptual change for a given perturbation size).

- Results open up a lot of questions for further investigation:

- How does transfer depend on $\epsilon$?

- Can the retinal layer be removed?

- How crucial is the model ensembling?

Questions

Why time-limited settings?

- Brief image presentations prevent humans from achieving perfect accuracy, so even small changes in perception show up as measurable changes in accuracy.

- Limited time for image processing restricts the utilization of recurrent and top-down processing pathways in the brain. This leads to the brain’s processing resembling that of a feedforward artificial neural network.

How to control the degree of perturbations?

- We selected a perturbation size that is noticeable on a computer screen but small in relation to the image intensity scale.

- Specifically, we chose a large magnitude for $\delta_{adv}$ to increase the likelihood of adversarial examples transferring to time-limited humans, while ensuring that the perturbations preserved the image’s class according to judgments made by humans without time constraints.
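One way to make the size constraint concrete, assuming the perturbation is bounded in the max-norm by the $\epsilon$ mentioned in the Discussion (the choice of norm is an assumption; these notes do not state it):

$$
x_{adv} = x + \delta_{adv}, \qquad \|\delta_{adv}\|_\infty \le \epsilon
$$

Under that assumption, rescaling clean images to $[40, 215]$ avoids clipping on an 8-bit intensity scale whenever

$$
40 - \epsilon \ge 0 \quad \text{and} \quad 215 + \epsilon \le 255 \;\Longrightarrow\; \epsilon \le 40 .
$$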