Authored by Tony Feng

Created on Nov. 22nd, 2022

Last Modified on Nov. 22nd, 2022

Intro

This series of posts contains notes from the course Self-Driving Fundamentals: Featuring Apollo, published by Udacity & Baidu Apollo. The course covers the key components of self-driving cars: HD maps, localization, perception, prediction, planning, and control. I post these "notes" (what I've learned) for study and review only.

Computer Vision for AD

Detection

Applications

- Find all pedestrians in the driving record image.

- Localize the position of the traffic light in an image.

Algorithm

Classification

Applications

- Determine if the obstacle in a picture is a pedestrian or a biker.

- Recognize the status of a traffic light.

Tracking

Applications

- Mark a dangerously driven vehicle on the road.

- Differentiate multiple cars on the road across a sequence of consecutive driving record frames.

Why tracking?

- Tracking handles occlusion.

- Tracking preserves identity.

- Tracking could be combined with a predictive algorithm to predict the future behavior of a vehicle.
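As a minimal sketch of how a track can feed a predictive step, the snippet below extrapolates the next position of a tracked vehicle under a constant-velocity assumption. The function name `predict_next` and the sample track are hypothetical and only illustrate the idea; they do not come from the course or from Apollo.

```python
def predict_next(track, frames_ahead=1):
    """Extrapolate a future (x, y) position from the last two tracked positions.

    Assumes constant velocity between frames, which is a simplification.
    """
    (x_prev, y_prev), (x_last, y_last) = track[-2], track[-1]
    vx, vy = x_last - x_prev, y_last - y_prev  # displacement per frame
    return (x_last + vx * frames_ahead, y_last + vy * frames_ahead)


# Hypothetical track: positions of one vehicle (same identity) over three frames.
track = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]
print(predict_next(track))  # (3.0, 1.5)
```

The same extrapolation hints at why tracking helps with occlusion: if the vehicle is hidden for a frame, the predicted position can stand in for the missing detection.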

Segmentation

Applications

- Determine which pixels in the image captured by the camera correspond to the drivable area.

- Distinguish lanes and road signs in driving record images.

Algorithm

Camera Images & LiDAR Images

Camera Images

- Width * Height * Depth

- Pixel $\leftarrow$ (R, G, B)
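To make the Width * Height * Depth layout concrete, here is a small sketch that stores an image as nested Python lists, where each pixel is an [R, G, B] triple. The image size and the helper `image_shape` are hypothetical, chosen only for illustration; real pipelines typically use an array library instead.

```python
# A tiny hypothetical image: 2 rows (height) x 3 columns (width), depth 3 (R, G, B).
HEIGHT, WIDTH, DEPTH = 2, 3, 3

# Start with an all-black image; each pixel is an [R, G, B] list of 0-255 values.
image = [[[0] * DEPTH for _ in range(WIDTH)] for _ in range(HEIGHT)]

# Colour the pixel at row 0, column 1 pure red.
image[0][1] = [255, 0, 0]


def image_shape(img):
    """Return (width, height, depth) of a nested-list image."""
    return (len(img[0]), len(img), len(img[0][0]))


print(image_shape(image))  # (3, 2, 3)
print(image[0][1])         # [255, 0, 0]
```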

LiDAR Images

- LiDAR Pulses $\leftarrow$ Point Cloud Representation

- Shape and Surface Texture

- Spatial Info
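A point cloud can be pictured as a list of 3D points, one per LiDAR return. The sketch below assumes each point is an (x, y, z) position in metres in the sensor frame and computes the distance from the sensor to each point. The sample values and the `ranges` helper are hypothetical.

```python
import math

# Hypothetical point cloud: one (x, y, z) tuple per LiDAR return, in metres.
point_cloud = [
    (3.0, 4.0, 0.0),
    (10.0, 0.0, 0.5),
    (-6.0, 8.0, 0.0),
]


def ranges(points):
    """Euclidean distance from the sensor origin to each point."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in points]


for point, distance in zip(point_cloud, ranges(point_cloud)):
    print(point, round(distance, 2))
```

Unlike a camera pixel, each point carries spatial information directly, which is why distances fall out of the representation without any extra estimation step.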

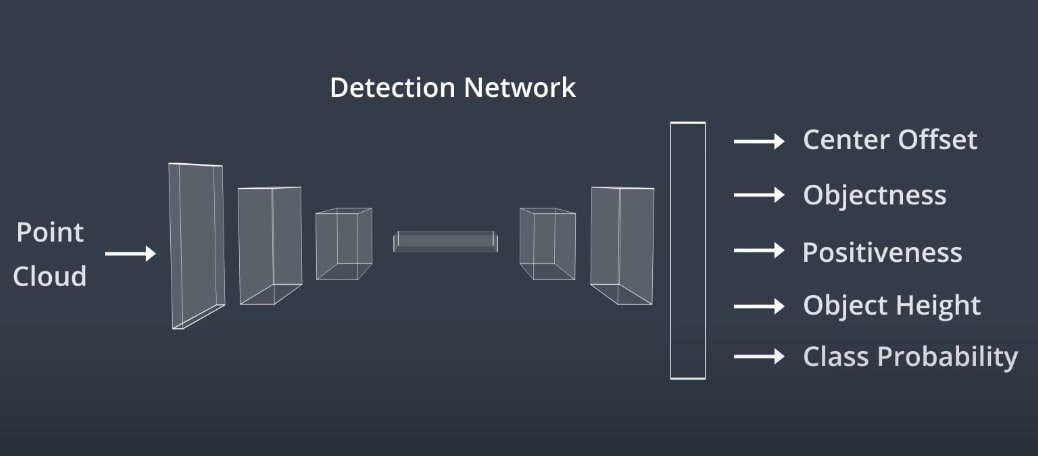

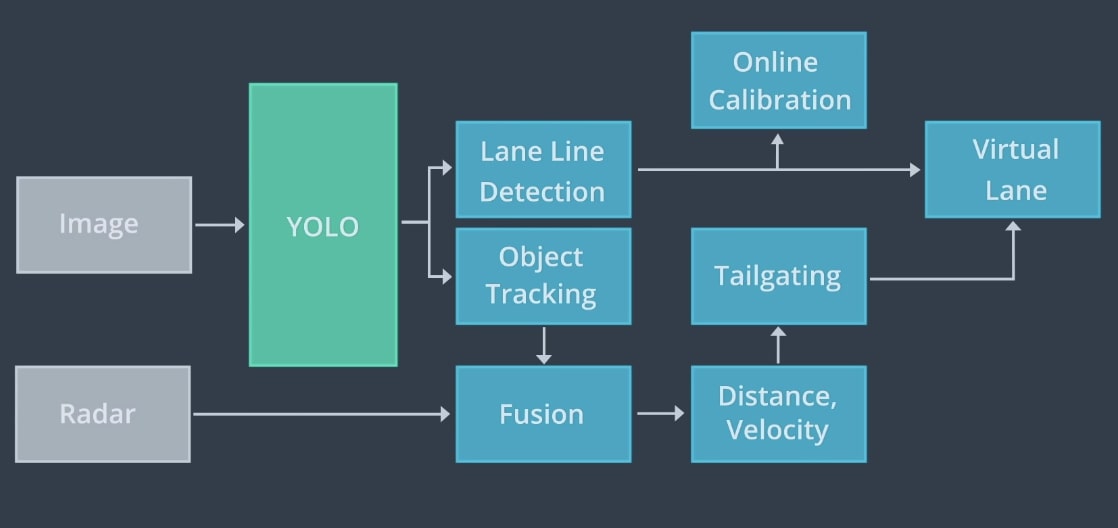

Apollo Perception

Obstacle Perception

- ROI Filtering

- 3D Object Detection

- Detection to Track Association
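The sketch below illustrates two of the steps above in a simplified form: ROI filtering and detection-to-track association. It is not Apollo's implementation. The rectangular ROI stands in for a map-derived region, and the greedy IoU matching is a deliberately simple association technique chosen for brevity. All names (`in_roi`, `iou`, `associate`) and thresholds are assumptions.

```python
def in_roi(point, x_range=(0.0, 50.0), y_range=(-10.0, 10.0)):
    """Keep a point only if it lies inside a hypothetical rectangular ROI."""
    x, y, _ = point
    return x_range[0] <= x <= x_range[1] and y_range[0] <= y <= y_range[1]


def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily map each track id to the index of its best unmatched detection."""
    candidates = sorted(
        ((iou(box, det), track_id, j)
         for track_id, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, track_id, j in candidates:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if track_id in matches or j in used:
            continue
        matches[track_id] = j
        used.add(j)
    return matches


# ROI filtering: drop points outside the region of interest.
points = [(5.0, 1.0, 0.2), (80.0, 0.0, 0.1), (12.0, -30.0, 0.0)]
print([p for p in points if in_roi(p)])  # [(5.0, 1.0, 0.2)]

# Association: existing tracks (id -> last box) versus new detections.
tracks = {1: (0.0, 0.0, 2.0, 2.0), 2: (5.0, 5.0, 7.0, 7.0)}
detections = [(5.2, 5.1, 7.2, 7.1), (0.1, 0.0, 2.1, 2.0), (20.0, 20.0, 21.0, 21.0)]
print(associate(tracks, detections))  # {1: 1, 2: 0}
```

The third detection matches no track, so a tracker would typically start a new track for it.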

Traffic Lights Detection

- HD Map $\leftarrow$ Location of the light

- Detection & Classification

- Matching multiple lights with lane lines

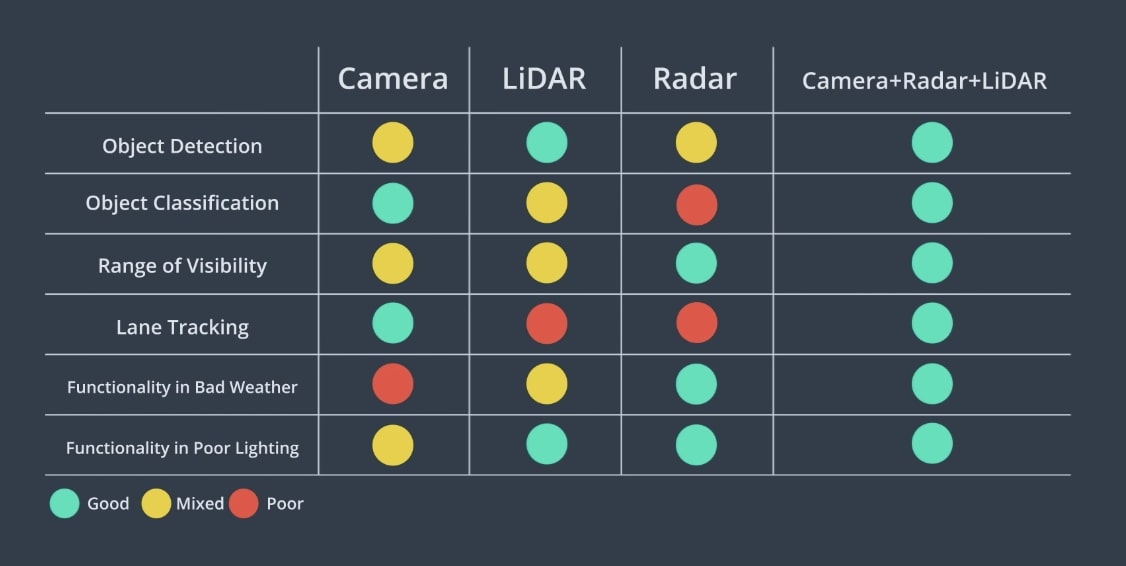

Sensor Data Comparisons

Radar

- Wavelength in the millimeter range

- Can sense non-line-of-sight objects

- Can measure velocity directly

- Low Resolution
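The wavelength and velocity points can be checked with a short calculation. The sketch below assumes a 77 GHz carrier, a common automotive radar band (the frequency is my assumption, not stated in the notes), and uses the standard Doppler relation $f_d = 2v / \lambda$ to recover radial velocity from a frequency shift. The function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def wavelength(frequency_hz):
    """Wavelength in metres for a given carrier frequency."""
    return SPEED_OF_LIGHT / frequency_hz


def radial_velocity(doppler_shift_hz, frequency_hz):
    """Radial velocity in m/s from a Doppler shift, using f_d = 2 * v / wavelength."""
    return doppler_shift_hz * wavelength(frequency_hz) / 2.0


CARRIER_HZ = 77e9  # assumed carrier frequency

print(round(wavelength(CARRIER_HZ) * 1000, 2), "mm")

# A target closing at 20 m/s produces this Doppler shift; inverting it recovers 20 m/s.
shift_hz = 2 * 20.0 / wavelength(CARRIER_HZ)
print(round(radial_velocity(shift_hz, CARRIER_HZ), 6), "m/s")
```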

LiDAR

- Wavelength in the infrared range

- Higher Resolution

- Most affected by dirt and small debris

Perception Fusion Strategy