Authored by Tony Feng

Created on Oct 11th, 2022

Last Modified on Oct 11th, 2022

Intro

This series of posts summarizes materials and readings from the course CSCI 1460 Computational Linguistics, which I took at Brown University. The class explores techniques behind recent advances in NLP with deep learning. I post these “Notes” (what I’ve learnt) for study and review only.

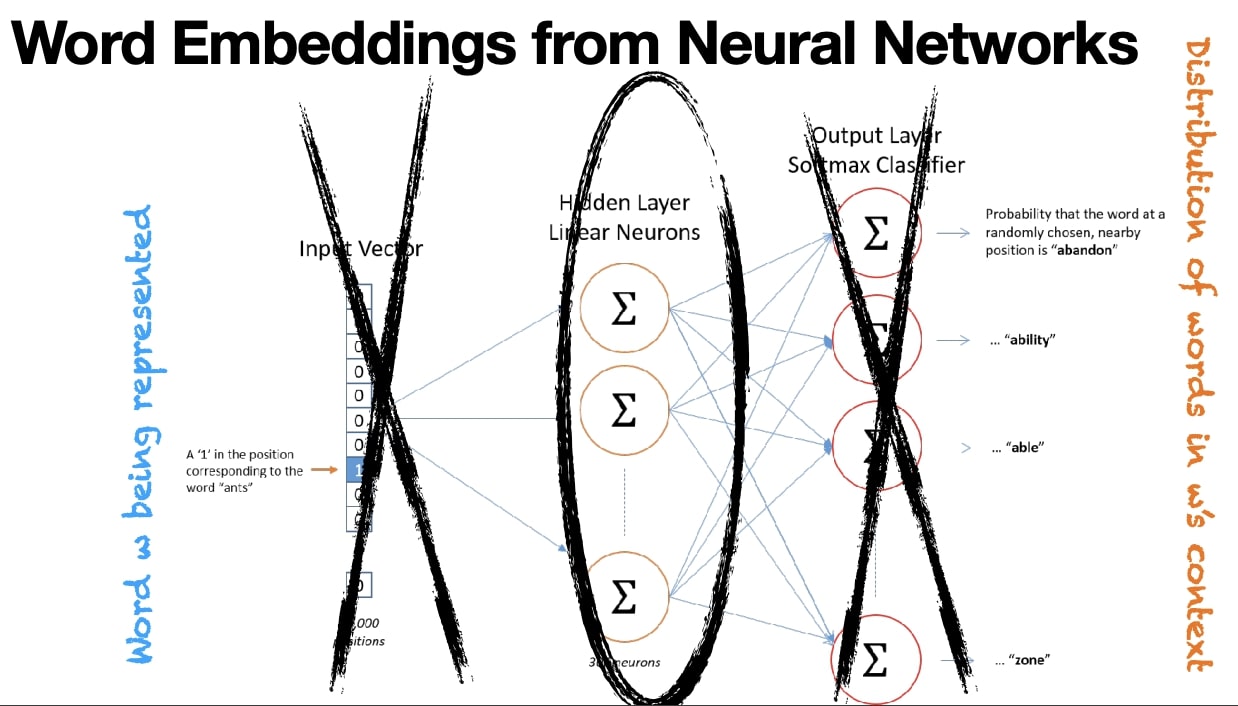

Word Embeddings

Word embeddings capture abstractions over input features and can be trained with backpropagation. They are treated as word representations, even though they are derived from the hidden states of a network.
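To make the "derived from hidden states" point concrete, here is a minimal NumPy sketch. The toy vocabulary, the embedding size, and the squared-error loss are all assumptions made for illustration and are not from the course. It shows that the hidden state for a one-hot input is just one row of the input weight matrix, and that a single backpropagation step only changes the row of the word that was fed in.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy vocabulary and embedding size (illustration only).
vocab = ["the", "cat", "sat", "on", "mat"]
word_to_id = {w: i for i, w in enumerate(vocab)}
embed_dim = 3

# Input-to-hidden weights of a toy network: one row per vocabulary word.
W = rng.normal(size=(len(vocab), embed_dim))


def one_hot(index, size):
    """Return a one-hot vector with a 1.0 at position `index`."""
    vec = np.zeros(size)
    vec[index] = 1.0
    return vec


def hidden_state(word, weights):
    """Hidden layer (before any non-linearity) for a one-hot input."""
    return one_hot(word_to_id[word], len(vocab)) @ weights


def embedding(word, weights):
    """The same vector, read directly as a row lookup."""
    return weights[word_to_id[word]]


def sgd_step(weights, word, target, lr=0.1):
    """One gradient step on the assumed loss L = 0.5 * ||h - target||^2."""
    x = one_hot(word_to_id[word], len(vocab))
    h = x @ weights
    grad = np.outer(x, h - target)  # dL/dW; non-zero only in the word's row
    return weights - lr * grad


W_new = sgd_step(W, "cat", target=np.zeros(embed_dim))
```

In practice the training signal comes from a real objective such as predicting neighbouring words, but the mechanics are the same: the gradient flows back into the rows of `W`, and those rows are what we read off as embeddings.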

NNs vs. SVD

- Both use dimensionality reduction to obtain abstractions.

- Embeddings from NNs can be more powerful but are harder to interpret, because NNs allow:

  - More layers

  - More non-linearity

  - More complex training objectives
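The comparison above can be sketched side by side. The co-occurrence counts, layer sizes, and random weights below are hypothetical, and the network is left untrained, so this only illustrates the structural difference: SVD gives a linear low-rank projection, while a network stacks layers and non-linearities (here `tanh`) on the way to the low-dimensional vector.

```python
import numpy as np

# Hypothetical word-word co-occurrence counts (illustration only).
words = ["king", "queen", "apple", "pear"]
M = np.array([
    [0.0, 5.0, 1.0, 0.0],
    [5.0, 0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 4.0],
    [0.0, 1.0, 4.0, 0.0],
])
k = 2  # target embedding dimension

# SVD route: keep the top-k singular directions (a purely linear reduction).
U, S, Vt = np.linalg.svd(M, full_matrices=False)
svd_embeddings = U[:, :k] * S[:k]          # one k-dimensional row per word
M_rank_k = svd_embeddings @ Vt[:k]         # best rank-k reconstruction of M
svd_error = np.linalg.norm(M - M_rank_k)   # Frobenius reconstruction error

# NN route: two layers with non-linearities, weights random and untrained.
rng = np.random.default_rng(0)
W1 = rng.normal(scale=0.1, size=(len(words), 8))
W2 = rng.normal(scale=0.1, size=(8, k))


def nn_embeddings(features):
    """Map each row of input features to a k-dimensional vector."""
    hidden = np.tanh(features @ W1)
    return np.tanh(hidden @ W2)


nn_emb = nn_embeddings(M)
```

Each SVD dimension is tied to a singular vector of `M`, which is part of why it is easier to interpret. The network's dimensions depend on every weight in `W1` and `W2` and on whatever objective is used to train them.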